Released Chainer/CuPy v4.0.0

We have released Chainer and CuPy v4.0.0 today! This is a major release that introduces several new features, especially for accelerating deep learning computations and making the installation process easier. The following is a selected list of updates (the full list can be seen in the release notes: Chainer, CuPy). Note that some of these updates are also backported to the v3 series.

- iDeep support.

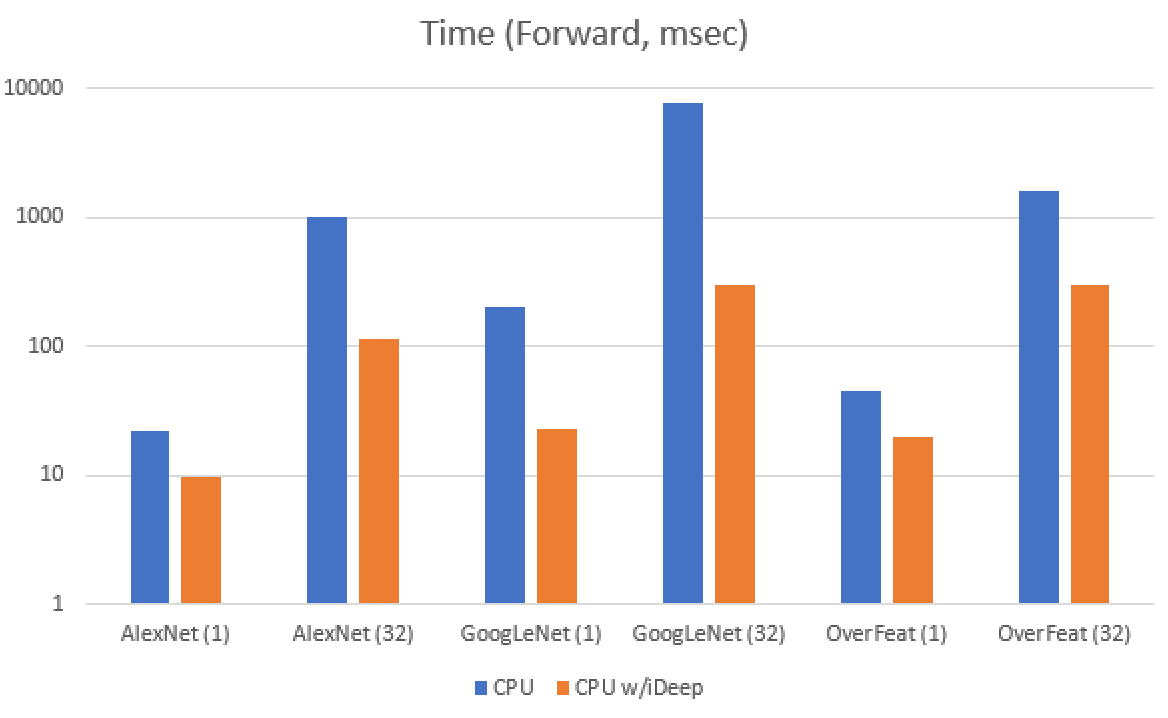

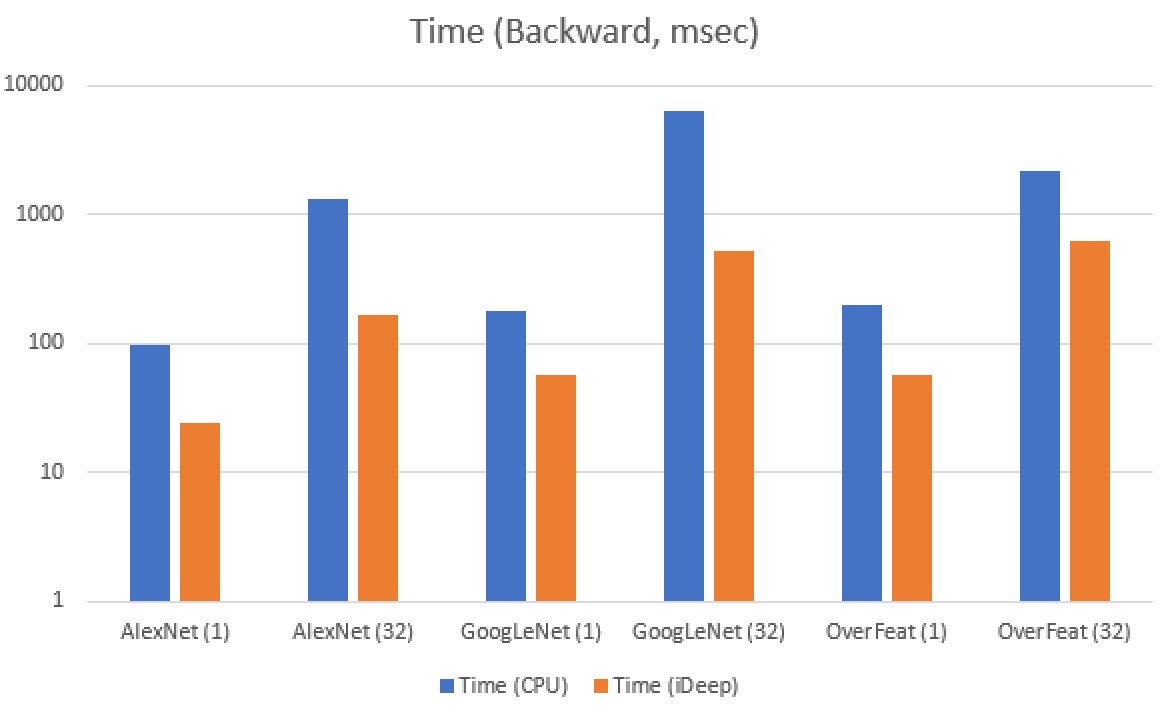

iDeep is a package that provides DNN standard routines optimized for Intel Architecture.

Chainer starts supporting these routines in many functions.

The following figures show the benchmark results on various convolutional networks with batch size 1 and 32.

To use this feature, install

To use this feature, install ideep4pywith pip and set an environment variableCHAINER_USE_IDEEP=auto. More tips are found here. - Wheel packages for CuPy.

  The installation is much faster than with the conventional sdist package. Currently, a separate package is provided for each CUDA version: `cupy-cuda80`, `cupy-cuda90`, and `cupy-cuda91`. Note that cuDNN and NCCL are bundled into these packages, so you no longer need to prepare them. You still have to install CUDA yourself and set the paths correctly.

- cuDNN convolution autotuner.

  The autotuner automatically selects the fastest convolution algorithm provided by the cuDNN API in the current environment. To use this feature, set `chainer.config.autotune = True`.

- Better FP16 training support.

  Training with `float16` sometimes requires extra techniques to deal with low-precision computation, which are summarized in the Mixed Precision User Guide by NVIDIA. Chainer v4 provides these techniques:

  - TensorCore is automatically used if available,
  - loss scaling is enabled by passing the `loss_scale` option to optimizers, and
  - `float32` master weight is used once you call `Optimizer.use_fp32_update`.

- Caffe export (experimental). Links that use supported functions can now be exported to Caffe format. This feature makes it easy to deploy your models. Note that you can also export your model to ONNX format with onnx-chainer.

- More double backprop. Most functions are rewritten in a new-style way that supports double backprop.

- NCCL2 is now supported.

- Documentation is reorganized.

- `Sequential` class is added as an experimental feature. This class makes it easy to define a sequential network.
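The FP16 item above names loss scaling and `float32` master weights without showing why they help. The following is a minimal NumPy-only sketch of the underlying numerics, not Chainer's implementation: the helper `backward_in_fp16` and the gradient values are made up for illustration, and casting to `float16` merely stands in for a low-precision backward pass.

```python
import numpy as np


def backward_in_fp16(true_grad, loss_scale=1.0):
    """Illustrative stand-in for a float16 backward pass (hypothetical helper).

    The gradient is multiplied by loss_scale, stored in float16 (where tiny
    values can underflow to zero), then divided by loss_scale in float32.
    """
    scaled = np.asarray(true_grad, dtype=np.float32) * np.float32(loss_scale)
    grad_fp16 = scaled.astype(np.float16)  # low-precision storage
    return grad_fp16.astype(np.float32) / np.float32(loss_scale)


# Made-up gradients: two are smaller than float16 can represent.
true_grad = np.array([1e-8, 2e-8, 1e-3], dtype=np.float32)

plain = backward_in_fp16(true_grad)                      # no loss scaling
scaled = backward_in_fp16(true_grad, loss_scale=1024.0)  # with loss scaling

print(plain)   # the two small gradients become 0.0
print(scaled)  # all three stay close to their true values

# Master weights: a small update applied to a float16 weight is rounded away,
# while the same update applied to a float32 copy is kept.
step = 1e-4
w_fp16 = np.float16(1.0) - np.float16(step)
w_fp32 = np.float32(1.0) - np.float32(step)
print(w_fp16 == np.float16(1.0))  # True: the update was lost
print(w_fp32 < np.float32(1.0))   # True: the update was kept
```

In Chainer itself these steps are covered by the optimizer options listed above, so manual scaling code of this kind should not be needed.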

We recommend updating to the latest version of Chainer and CuPy.

You can find the upgrade guide here.

If you want to switch to the wheel package of CuPy, you first have to uninstall the current version and then install the wheel version.

Updating Chainer should be done as usual with the command `pip install -U chainer`.
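The switch described above can be written down as a short command list. The sketch below is a hypothetical helper (the name `switch_to_wheel_commands` is not part of Chainer or CuPy) that maps a CUDA version to one of the three wheel names mentioned in this post and returns the pip commands in order, assuming the existing installation came from the `cupy` sdist package. It only builds strings and does not run anything.

```python
# Wheel package names taken from this post; other CUDA versions are not covered.
CUPY_WHEELS = {
    "8.0": "cupy-cuda80",
    "9.0": "cupy-cuda90",
    "9.1": "cupy-cuda91",
}


def switch_to_wheel_commands(cuda_version):
    """Return the pip commands for moving to the CuPy wheel (hypothetical helper)."""
    if cuda_version not in CUPY_WHEELS:
        raise ValueError("no CuPy wheel listed for CUDA " + cuda_version)
    return [
        "pip uninstall cupy",                       # remove the sdist-based install first
        "pip install " + CUPY_WHEELS[cuda_version],  # then install the matching wheel
        "pip install -U chainer",                   # update Chainer as usual
    ]


for command in switch_to_wheel_commands("9.0"):
    print(command)
```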

We appreciate any feedback. You can ask questions or leave comments at gitter, Slack, Google Groups, and StackOverflow.

The major release also means that development of the next version has started. We are currently planning the following features for Chainer and CuPy v5 (not exhaustive, and not a promise).

- Static subgraph caching and optimization

- Baseline experiment code generator

- FP16 mode

- NumPy-compatible interface for `chainer.Variable`

- ChainerMN integration

- TensorComprehensions support in CuPy

- AMD GPU support in CuPy via HIP/ROCm

We hope this major update accelerates machine learning research.

About Chainer

Chainer is a Python-based, standalone open source framework for deep learning models. Chainer provides a flexible, intuitive, and high performance means of implementing a full range of deep learning models, including state-of-the-art models such as recurrent neural networks and variational autoencoders.
